EMOPortraits: Emotion-enhanced Multimodal One-shot Head Avatars

Abstract

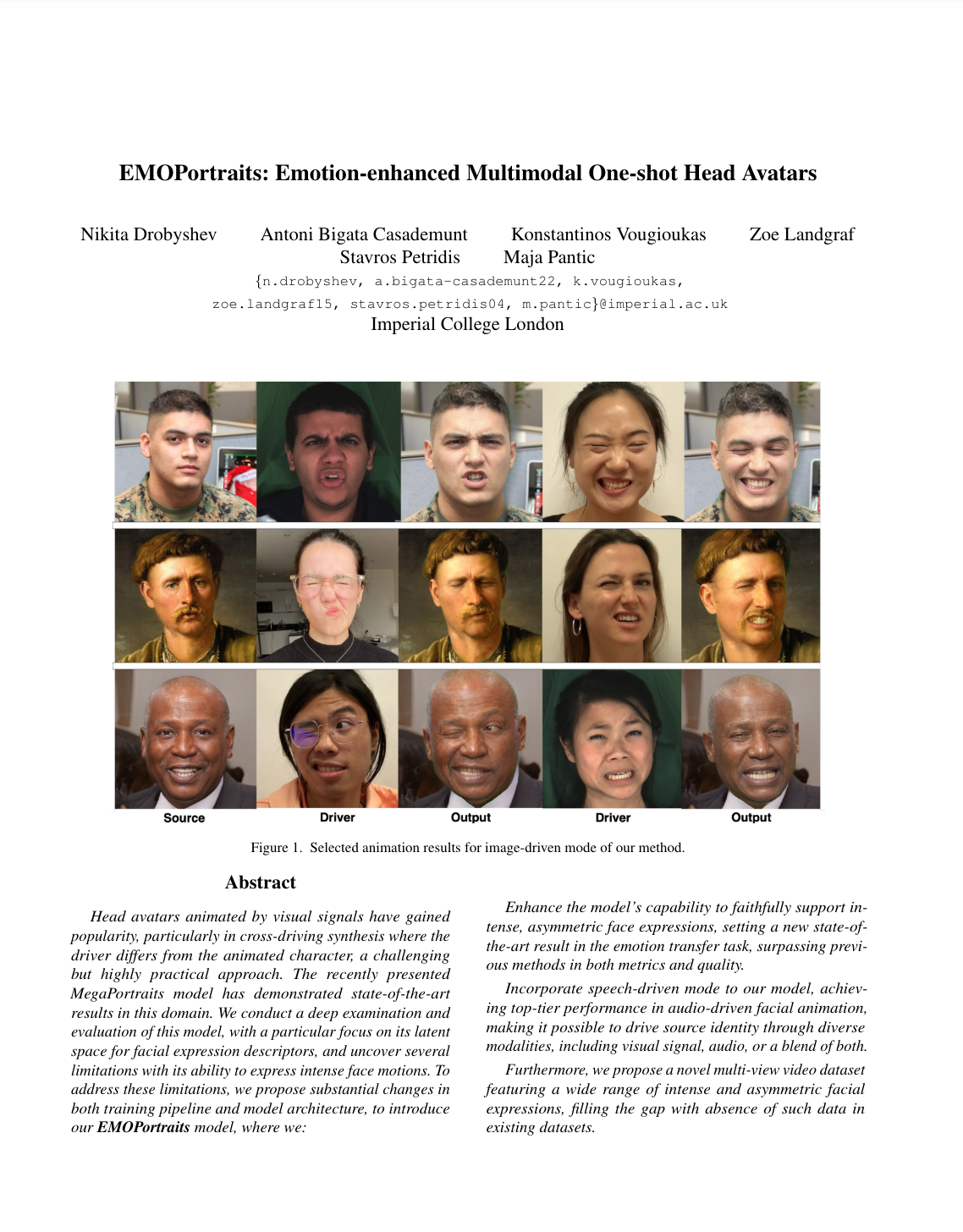

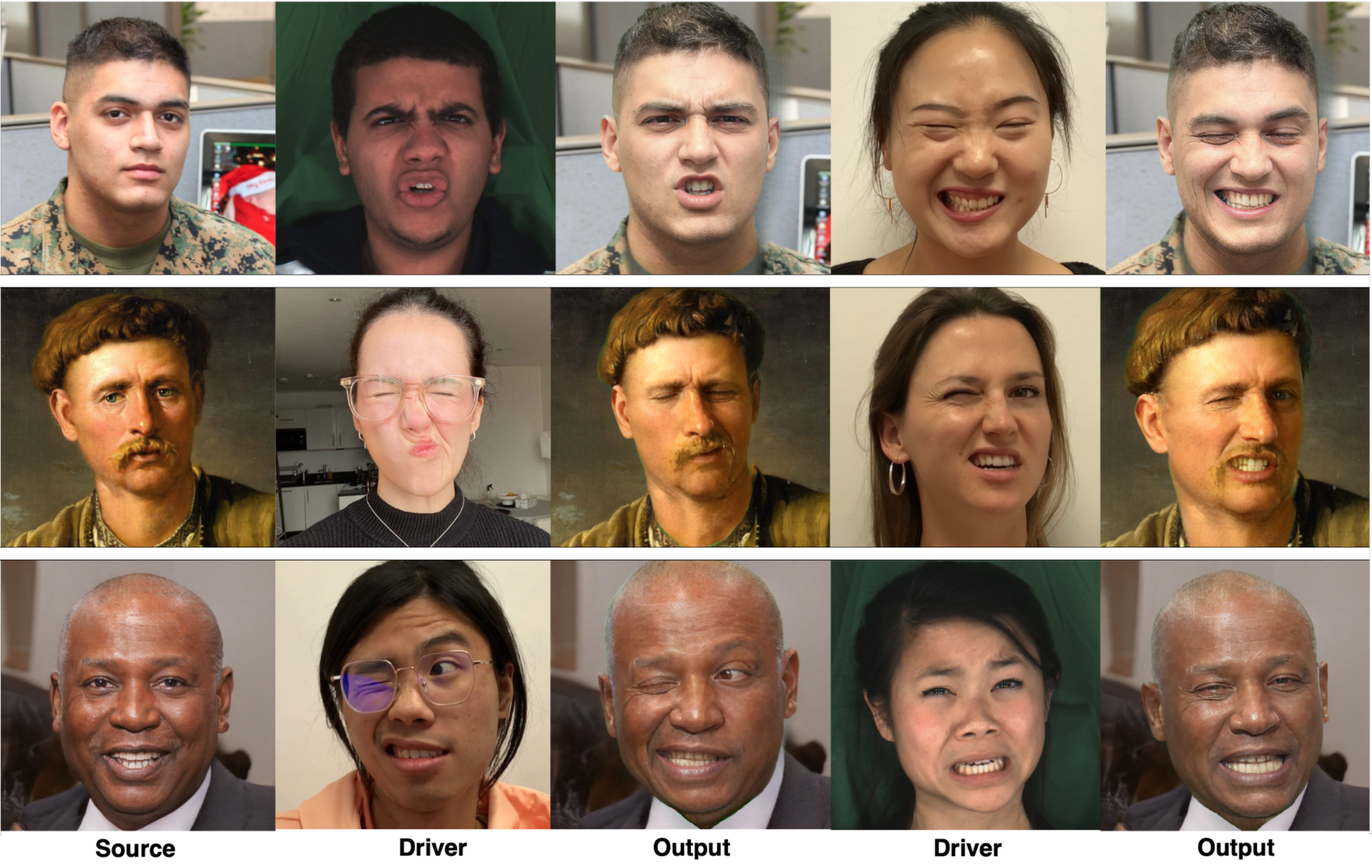

Head avatars animated by visual signals are increasingly popular in scenarios where the animator and character differ, a challenging yet practical approach. Our analysis revealed limitations in the existing model's handling of intense facial movements. To address this, we introduced the EMOPortraits model, which significantly improves the realism of intense and asymmetric expressions, achieving top results in emotion transfer. We also added a speech-driven mode to enhance audio-visual facial animation. Additionally, we created a unique multi-view video dataset to better represent intense and varied expressions, addressing a notable gap in current datasets.

We propose a method to instantly create high-resolution human avatars through a two-stage training process, with an optional audio-driven phase for video generation from a single image and audio input. Our standard training approach involves selecting two random frames—source and driver—from our dataset at each step. The model adapts the driver frame's motion and expressions onto the source frame to generate the final image. Effective learning is achieved when both frames originate from the same video, enhancing the model's accuracy in matching the driver frame.

Main scheme

Speech driven examples

BibTeX

@misc{drobyshev2024emoportraits,

title={EMOPortraits: Emotion-enhanced Multimodal One-shot Head Avatars},

author={Nikita Drobyshev and Antoni Bigata Casademunt and Konstantinos Vougioukas and Zoe Landgraf and Stavros Petridis and Maja Pantic},

year={2024},

eprint={2404.19110},

archivePrefix={arXiv},

primaryClass={cs.CV}

}